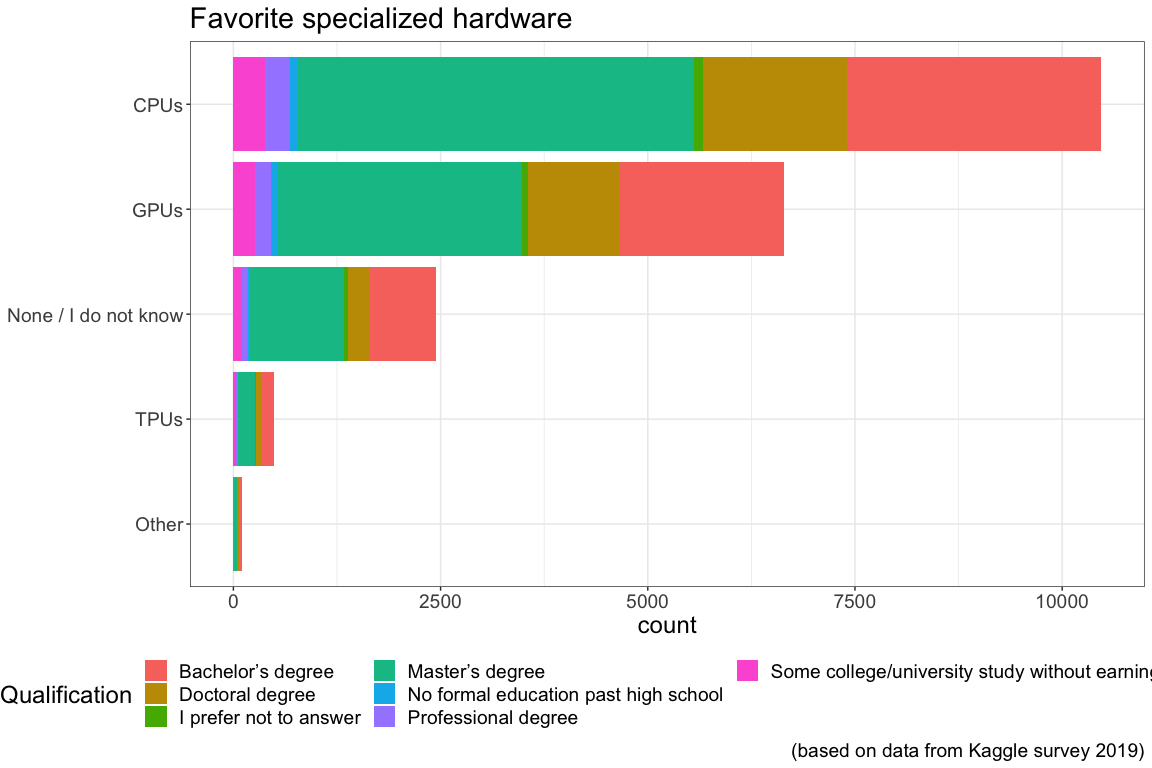

Chapter 18 Favorite specialized hardware

The favorite specialized hardware is shown in the graph below.

The last qualification, which is cut off in the legend of the plot above, reads “Some college/university study without earning a bachelor’s degree”.


- CPU => Central Processing Unit
  - Performs basic arithmetic, logic, and input/output instructions
  - Heart of every computing device
- GPU => Graphics Processing Unit
  - Processor optimized for graphics
  - Very fast matrix multiplication => speeds up neural network computation
- TPU => Tensor Processing Unit
  - A tensor processing unit (TPU) is an AI accelerator application-specific integrated circuit (ASIC) developed by Google specifically for neural network machine learning.
  - Edge TPU
    - 4 TOPS¹
    - 2 W
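To make the link between matrix multiplication and neural network computation concrete, here is a minimal sketch in plain Python. It treats the forward pass of a fully connected layer (without bias or activation) as a single matrix product, counts the operations involved, and relates that count to the Edge TPU figures listed above. The toy matrices, the layer size, and the helper names `matmul` and `matmul_ops` are assumptions made for illustration. The time and energy values are idealized bounds at peak throughput, not measurements.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows (naive triple loop)."""
    inner = len(b)
    assert all(len(row) == inner for row in a), "inner dimensions must match"
    cols = len(b[0])
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(len(a))
    ]


def matmul_ops(batch, n_in, n_out):
    """Operations in a (batch x n_in) @ (n_in x n_out) product,
    counting every multiply and every add as one operation."""
    return 2 * batch * n_in * n_out


# Hypothetical toy layer: batch of 2 samples, 3 input features, 2 outputs.
x = [[1.0, 2.0, 3.0],
     [4.0, 5.0, 6.0]]
w = [[1.0, 0.0],
     [0.0, 1.0],
     [1.0, 1.0]]
y = matmul(x, w)  # [[4.0, 5.0], [10.0, 11.0]]

# Edge TPU figures from the list above: 4 TOPS at 2 W.
PEAK_OPS_PER_S = 4e12
POWER_W = 2.0
OPS_PER_JOULE = PEAK_OPS_PER_S / POWER_W  # 2e12, i.e. 2 TOPS per watt

# Hypothetical layer size; assumes the chip runs at peak the whole time.
ops = matmul_ops(batch=1, n_in=1024, n_out=1024)
ideal_seconds = ops / PEAK_OPS_PER_S
ideal_joules = ideal_seconds * POWER_W

print(y)
print(f"{ops} ops, ideal time {ideal_seconds:.2e} s, ideal energy {ideal_joules:.2e} J")
```

The triple loop is what a CPU would execute step by step. GPUs and TPUs are fast on this workload because the multiply-add operations inside the loops are independent of each other and can run in parallel.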

Wei, Brooks, and others (2019) compare the three processors with respect to their machine learning capabilities:

• TPU is highly-optimized for large batches and CNNs, and has the highest training throughput

• GPU shows better flexibility and programmability for irregular computations, such as small batches and non-MatMul computations. The training of large FC models also benefits from its sophisticated memory system and higher bandwidth.

• CPU has the best programmability, so it achieves the highest FLOPS utilization for RNNs, and it supports the largest model because of large memory capacity.
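The comparison above refers to FLOPS utilization. A minimal sketch of that metric follows, assuming the usual definition: achieved floating-point operations per second divided by the hardware's peak rate. The function name and all numbers are hypothetical and are not taken from the cited paper.

```python
def flops_utilization(total_flops, seconds, peak_flops_per_s):
    """Fraction of the peak floating-point rate that a workload achieved."""
    if seconds <= 0 or peak_flops_per_s <= 0:
        raise ValueError("seconds and peak_flops_per_s must be positive")
    achieved_flops_per_s = total_flops / seconds
    return achieved_flops_per_s / peak_flops_per_s


# Hypothetical workload: 2e12 floating-point operations finished in 0.5 s
# on a device whose peak rate is 10e12 FLOPS.
utilization = flops_utilization(
    total_flops=2e12,
    seconds=0.5,
    peak_flops_per_s=10e12,
)
print(f"utilization: {utilization:.0%}")
```

Under this definition a device with a lower peak rate can still show the higher utilization if the workload keeps its arithmetic units busy, so utilization should be read alongside raw throughput.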

References

Wei, Gu-Yeon, David Brooks, and others. 2019. “Benchmarking TPU, GPU, and CPU Platforms for Deep Learning.” arXiv Preprint arXiv:1907.10701.

¹ Tera operations per second